I have always been fascinated by OCR (Optical Character Recognition) systems: applications that translate the writing contained in images into real text. These applications are very useful when a printed document has to be digitized instead of being typed manually from scratch.

There are many ways to implement one. In this case I decided to develop a simple classifier that, given a symbol (an upper case letter or a digit), returns the corresponding encoded character.

To do that, I obtained the symbols as I did in this previous post. The application removes all the noise points from each captcha image, leaves just the clusters of points that belong to a character, writes each cluster to an image file and saves that file in a directory.

This collection is used to build the models that recognize the symbols it contains. For each symbol in the collection, a model has to be constructed, trained and validated.

PS: the set of considered symbols corresponds to the upper case letters and the digits, excluding the zero and O symbols (maybe because they are hard to distinguish).

I decided to use neural networks to build the models because I know them a little better than other Machine Learning algorithms. Moreover, there are entire frameworks that simplify the construction and training of neural networks, so there is no need to develop and debug the required algorithms and data structures. In this case I used the Encog framework, which has many models and training algorithms available.

The neural networks used here are trained with supervised learning: previously labelled instances (positive and negative examples) must be provided to train the model. A network takes continuous values as input and produces other continuous values as output.

The program uses one neural network for each symbol to recognize. This allows each network to be customized when needed. A Multi Layer Perceptron structure has been used (an input layer of 900 nodes, 2 hidden layers of 100 and 50 nodes, and an output layer of 1 node).

Each node is connected to all nodes in the next layer and uses a sigmoid as its activation function.
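To make the structure concrete, here is a minimal sketch in plain Java of what one fully connected sigmoid layer computes. It is not Encog's implementation: the class name `DenseLayerSketch`, the weight layout and the bias handling are assumptions made only for illustration.

```java
// Illustrative sketch only: Encog performs this computation internally.
final class DenseLayerSketch {

    // Logistic sigmoid: maps any real value into the interval (0, 1).
    static double sigmoid(double x) {
        return 1.0 / (1.0 + Math.exp(-x));
    }

    // weights[j][i] links input node i to output node j (assumed layout);
    // bias[j] is added to the weighted sum of output node j.
    static double[] forward(double[] input, double[][] weights, double[] bias) {
        double[] output = new double[weights.length];
        for (int j = 0; j < weights.length; j++) {
            double sum = bias[j];
            for (int i = 0; i < input.length; i++) {
                sum += weights[j][i] * input[i];
            }
            output[j] = sigmoid(sum);
        }
        return output;
    }

    public static void main(String[] args) {
        double[] input = {1.0, 0.0, 1.0};
        double[][] weights = {{0.5, -0.2, 0.1}, {-0.3, 0.8, 0.3}};
        double[] bias = {0.0, 0.0};
        double[] out = forward(input, weights, bias);
        System.out.println(out[0] + " " + out[1]);
    }
}
```

Chaining three such layers (900 to 100, 100 to 50, 50 to 1) would give the shape of the network described above.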

The input layer is composed of 900 nodes because each image measures 30 x 30 pixels, so one node is used for each pixel. The value provided to each node is 1.0 if its pixel is black and 0.0 if its pixel is white.
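The encoding described above could be sketched as follows. This is only an illustration: the class name `PixelEncoderSketch`, the row-by-row ordering and the brightness cut-off of 128 used to decide whether a pixel counts as black are all assumptions, not details taken from the original project.

```java
import java.awt.image.BufferedImage;

// Illustrative sketch: turns a 30 x 30 image into the 900 input values.
final class PixelEncoderSketch {

    // Flattens the image row by row: 1.0 for a dark pixel, 0.0 for a light one.
    static double[] encode(BufferedImage image) {
        int width = image.getWidth();
        int height = image.getHeight();
        double[] input = new double[width * height];
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                int rgb = image.getRGB(x, y);
                int r = (rgb >> 16) & 0xFF;
                int g = (rgb >> 8) & 0xFF;
                int b = rgb & 0xFF;
                // Assumed rule: a mean brightness below 128 counts as black.
                boolean black = (r + g + b) / 3 < 128;
                input[y * width + x] = black ? 1.0 : 0.0;
            }
        }
        return input;
    }

    public static void main(String[] args) {
        BufferedImage image = new BufferedImage(30, 30, BufferedImage.TYPE_INT_RGB);
        for (int y = 0; y < 30; y++) {
            for (int x = 0; x < 30; x++) {
                image.setRGB(x, y, 0xFFFFFF); // white background
            }
        }
        image.setRGB(2, 1, 0x000000); // one black pixel at column 2, row 1
        double[] input = encode(image);
        System.out.println(input.length + " inputs, value at index 32: " + input[32]);
    }
}
```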

The output layer is composed of only one node because the net is used to establish whether the input image corresponds to the recognized symbol (so the ideal output value is 1.0) or not (the ideal output value is 0.0).

Here is the code used to create a neural network:

    // Multi Layer Perceptron: input layer, two hidden layers (100 and 50 nodes), output layer
    nn = new BasicNetwork();
    nn.addLayer(new BasicLayer(new ActivationSigmoid(), false, inputNodes));
    nn.addLayer(new BasicLayer(new ActivationSigmoid(), true, 100));
    nn.addLayer(new BasicLayer(new ActivationSigmoid(), true, 50));
    nn.addLayer(new BasicLayer(new ActivationSigmoid(), true, outputLayer));
    nn.getStructure().finalizeStructure();
    nn.reset(); // randomize the initial weights

The Resilient Propagation algorithm has been used to train the neural networks.

Here is the code used to train a neural network:

    // Train with Resilient Propagation until the error drops below the target value
    Train train = new ResilientPropagation(nn, dataset);
    EncogUtility.trainToError(train, error);

After all the symbols had been extracted and saved to files, I obtained a collection of images.

I divided the collection into three (disjoint) sets:

- training set: it is composed of a set of directories (one for each symbol) into which 7115 images have been moved according to the symbol they contain. This labels the images and allows the images in each directory to be used for the learning (training) of the associated neural network. The more images each directory contains, the more “knowledge” is provided to the neural network, which is why this set holds most of the collection’s images;

- validation set: in this case (and the next) too, the 196 images have been moved into directories. This set, however, is used to evaluate the neural network obtained from the training and to establish whether it can be used to classify images. For one net at a time, a dataset is created in which the images containing the recognized symbol are positive instances and all the others are negative instances. This dataset is given to the net and each result is compared with the ideal one: if the values are similar, the net classified the instance correctly. The percentage of correct classifications can be printed, which makes it possible to evaluate each net and decide whether to rebuild it or to add more examples to the training set;

- test set: this set of 494 labelled images is used only for the final evaluation of the nets. The validation set is used to check whether a net is acceptable, and it allows each net to be rebuilt and edited as many times as needed; the test set should instead be used to provide a final evaluation of the labelling capabilities of the net.

Each of these sets of images (with its hierarchy of directories) is stored in a directory with the name of the corresponding set.
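The evaluation of a single net on the validation or test set could look roughly like the sketch below. It is not the project's code: a `ToDoubleFunction<double[]>` stands in for a trained network, and treating an output as "similar" to the ideal value when both fall on the same side of a threshold is an assumption made for illustration.

```java
import java.util.function.ToDoubleFunction;

// Illustrative sketch: percentage of correct classifications for one net.
final class ValidationSketch {

    // Counts an instance as correct when the net output and the ideal value
    // (1.0 for positive, 0.0 for negative) fall on the same side of the threshold.
    static double accuracy(ToDoubleFunction<double[]> net,
                           double[][] inputs, double[] ideals, double threshold) {
        if (inputs.length == 0) {
            return 0.0; // nothing to evaluate
        }
        int correct = 0;
        for (int k = 0; k < inputs.length; k++) {
            boolean predicted = net.applyAsDouble(inputs[k]) > threshold;
            boolean expected = ideals[k] > threshold;
            if (predicted == expected) {
                correct++;
            }
        }
        return (double) correct / inputs.length;
    }

    public static void main(String[] args) {
        // Stand-in "network": simply echoes the first input value.
        ToDoubleFunction<double[]> fakeNet = in -> in[0];
        double[][] inputs = {{0.9}, {0.2}, {0.7}, {0.1}};
        double[] ideals = {1.0, 0.0, 0.0, 0.0};
        System.out.println(100.0 * accuracy(fakeNet, inputs, ideals, 0.5) + "% correct");
    }
}
```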

Moreover, it is possible to use all the trained neural networks to label a set of images automatically: given the set of directories (one for each symbol), each image is provided as input to all the networks and the results are compared. The net that returns the highest output (greater than 0.5, if any) is considered to have recognized the image, and the corresponding symbol is returned.
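The selection rule just described can be sketched in plain Java. The class `LabellerSketch`, the method `label` and the use of `ToDoubleFunction<double[]>` as a stand-in for a trained Encog network are hypothetical names chosen for this example.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;
import java.util.function.ToDoubleFunction;

// Illustrative sketch: pick the symbol whose net gives the highest output above a threshold.
final class LabellerSketch {

    static Optional<String> label(Map<String, ToDoubleFunction<double[]>> nets,
                                  double[] input, double threshold) {
        String bestSymbol = null;
        double bestOutput = threshold; // outputs must be strictly greater than this
        for (Map.Entry<String, ToDoubleFunction<double[]>> entry : nets.entrySet()) {
            double output = entry.getValue().applyAsDouble(input);
            if (output > bestOutput) {
                bestOutput = output;
                bestSymbol = entry.getKey();
            }
        }
        // Empty when no net exceeded the threshold: the image stays unlabelled.
        return Optional.ofNullable(bestSymbol);
    }

    public static void main(String[] args) {
        Map<String, ToDoubleFunction<double[]>> nets = new LinkedHashMap<>();
        nets.put("A", in -> 0.3); // stand-in outputs instead of real networks
        nets.put("B", in -> 0.8);
        nets.put("C", in -> 0.6);
        System.out.println(label(nets, new double[900], 0.5).orElse("unrecognized"));
    }
}
```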

When an image is labelled, it is moved into the directory of the associated symbol. Otherwise it stays in the main directory.
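Moving a labelled image could be done with the standard `java.nio.file` API, as in this minimal sketch. The helper name `moveToSymbolDir` and the directory layout (one sub-directory per symbol under a base directory) are assumptions based on the description above.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;

// Illustrative sketch: move a labelled image into the directory of its symbol.
final class ImageMoverSketch {

    static Path moveToSymbolDir(Path image, Path baseDir, String symbol) throws IOException {
        Path targetDir = baseDir.resolve(symbol);
        Files.createDirectories(targetDir); // no-op if the directory already exists
        Path target = targetDir.resolve(image.getFileName());
        return Files.move(image, target, StandardCopyOption.REPLACE_EXISTING);
    }

    public static void main(String[] args) throws IOException {
        Path baseDir = Files.createTempDirectory("classification");
        Path image = Files.createFile(baseDir.resolve("sample.png"));
        Path moved = moveToSymbolDir(image, baseDir, "A");
        System.out.println("moved to " + moved);
    }
}
```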

Because there are a lot of images to classify and each one has to be compared with all neural networks, it isn’t efficient to load the nets from file each time they are needed. This is why they are cached in a HashMap that associates each one with the symbol it recognizes, and retrieved from there when needed.

    HashMap<NeuralNetwork, String> netMap = new HashMap<NeuralNetwork, String>();
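As a possible variant, the cache could also be keyed by symbol and filled lazily, so that each net is loaded from file at most once. The sketch below is an assumption, not the project's code: `NetworkCacheSketch` is a hypothetical class, and the `loader` function stands in for whatever routine reads a network from disk.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Illustrative sketch: lazy, symbol-keyed cache of loaded networks (N is the network type).
final class NetworkCacheSketch<N> {

    private final Map<String, N> cache = new HashMap<>();
    private final Function<String, N> loader; // hypothetical "load from file" routine

    NetworkCacheSketch(Function<String, N> loader) {
        this.loader = loader;
    }

    // Loads the net for a symbol on first use and reuses it afterwards.
    N get(String symbol) {
        return cache.computeIfAbsent(symbol, loader);
    }

    public static void main(String[] args) {
        int[] loads = {0};
        NetworkCacheSketch<String> nets = new NetworkCacheSketch<>(symbol -> {
            loads[0]++; // count how many times the loader actually runs
            return "net-for-" + symbol;
        });
        nets.get("A");
        nets.get("A");
        nets.get("B");
        System.out.println("loader calls: " + loads[0]);
    }
}
```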

During the learning phase, each neural network needs just a few minutes and a few iterations to converge to a low error rate (less than 0.5% in this case).

Only some symbols (B, E, F, H) require hundreds of iterations, and in some cases there is no convergence. In these cases a different net structure has been adopted, increasing the number of nodes in the hidden layers.

The evaluation of the validation set and the test set is very fast, both because the neural networks are fetched quickly and because small models compute the output value very quickly (it is just a matter of sums and multiplications).

The images contained in the test directory have also been used to check empirically what an average of 98% correct classifications means. The images are taken out of the “classification” directory and moved into the directory associated with the neural network that “recognized” the symbol they contain.

After all the images have been processed, some of them may remain in the main directory because no neural network recognized them with a high output value. The other images have been moved into the directory of the neural network that recognized them with the highest output value.

Looking inside each directory, it is easy to see which images have been labelled correctly and which ones wrongly.

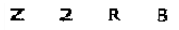

As you can see, the classification is not always correct. There are some mistakes due to the similarity of some symbols (e.g. 2 and Z, or 1 and I); some of them could be made even by a human, because the alteration applied to the characters is too strong for them to be distinguishable.

However, most of the symbols have been recognized, so the approach could be applied to solve the captchas they belong to.

Here is the Eclipse project with the source code, the dataset used and all the directory hierarchies.

Here is the set of images that I used to extract the symbols and create the images used during the training, validation and test phases.